UK’s Age Appropriate Design Code (Children’s Code): Full Compliance Guide

Children today spend much of their time online. That’s why safeguarding their privacy and ensuring their safety have become critical goals for responsible adults. The UK’s Age Appropriate Design Code, commonly referred to as the Children’s Code, is a statutory code of practice created to tackle issues related to children’s safety online. Launched by the Information Commissioner’s Office (ICO), the Code establishes a new benchmark for how online services must handle children’s data. In this article, we’ll examine what the Age Appropriate Design Code is, the reasons behind its implementation, and its implications for businesses and children in the UK.

What Is the Age Appropriate Design Code?

The Age Appropriate Design Code (AADC) is a statutory code of practice that lays out clear guidelines for any online service that children under 18 might use. First rolled out on September 2, 2020, it gave businesses a year to get their activities in order before becoming fully enforceable in September 2021.

The Age Appropriate Design Code operates under the Data Protection Act 2018. At the core of the Code are 15 design standards built around one simple principle: when you’re designing online services, children’s well-being must come first.

So, who does this affect? Pretty much everyone in the digital space: from websites and apps to social media platforms, online games, and even internet-connected toys. If a child could reasonably access it, the Code applies.

Why Was the Code Implemented?

Before 2020, the internet resembled the Wild West for adults and children alike, with no sheriff in sight. Although the internet has always been a fantastic place for learning and connecting, it also has a darker side where the data of its youngest users can be treated as a profitable commodity.

For far too long, children’s data was collected, shared, and analyzed at an unprecedented scale, often without their understanding or consent. Social platforms, games, and apps used persuasive design and invasive tracking to fuel engagement and advertising profits. And we’re not talking just about targeted ads: online services built detailed profiles of children’s habits, preferences, and even their locations.

Given how widespread these practices had become, the motivation for the UK’s Age Appropriate Design Code (the Children’s Code) was clear: to rein in growing data exploitation and protect children from online harms that were becoming increasingly prevalent.

First, the General Data Protection Regulation (GDPR), enforced from 2018, laid the foundation for stronger privacy and data protection rules. Yet it didn’t go far enough to address children’s specific vulnerabilities in the digital world. In 2019, the UK’s Information Commissioner’s Office (ICO) recognized this gap and developed the draft AADC to shift online design toward the child’s best interests.

Introduced in 2020 and enforced from 2021, the Code marked a turning point: it set clear, practical expectations for tech companies to protect children’s rights by design, based on the underlying goal of making the digital world safer, fairer, and more respectful for young people.

The UK’s AADC also had a global impact: it became the blueprint for California’s privacy law protecting minors. The California Age Appropriate Design Code Act (CA AADC), which applies to businesses as defined by the California Consumer Privacy Act (CCPA), was modeled heavily on the UK’s AADC.

The 15 Standards of the Age Appropriate Design Code

The Age Appropriate Design Code outlines 15 standards that online services likely to be accessed by children must follow when they build platforms and process children’s data.

| Standard | What it means | Compliance example |
| --- | --- | --- |
| Best interests of the child | Consider what’s best for the child when designing and operating the service. | A platform prioritizes child safety over ad revenue decisions. |
| Data Protection Impact Assessments (DPIAs) | Assess and document risks to children before launching a service or feature. | Conducting a DPIA before introducing a new chat tool for under-18s. |
| Age-appropriate application | Tailor privacy and safety features according to the user’s age group. | Showing simplified privacy explanations for users under 13. |
| Transparency | Explain data practices clearly and accessibly, with no jargon. | Using short videos or icons to explain how data is collected. |
| Detrimental use of data | Don’t use data in ways that are harmful or unfair to children. | Not using engagement data to push addictive content loops. |
| Policies and community standards | Follow your own published rules and moderate content responsibly. | Removing inappropriate posts in line with community guidelines. |
| Default settings | Set privacy to “high” by default for child users. | Public profiles are off by default. |
| Data minimization | Collect and retain only the minimum data necessary. | Only storing email and username, not full birthdate or location. |
| Data sharing | Don’t share children’s data unless there’s a compelling reason to do so. | Avoiding third-party advertising trackers on kids’ accounts. |
| Geolocation | Turn geolocation off by default and make it obvious when it’s on. | A visible “location on” indicator in an app’s interface. |
| Parental controls | Be transparent about parental monitoring and respect children’s privacy as they mature. | Showing a clear alert when parents can view activity logs. |
| Profiling | Switch profiling off by default; only enable with a clear, child-friendly explanation. | Not using algorithms to recommend ads to under-18s. |
| Nudge techniques | Avoid design tricks that encourage poor privacy choices or overuse. | No “accept all” button that is more visually prominent than “manage settings”. |
| Connected toys and devices | Apply the same data and safety standards to physical devices. | A smart toy stores no audio data after interaction ends. |
| Online tools | Provide clear tools for children to exercise their data rights. | Easy “delete my data” or “turn off tracking” buttons in settings. |

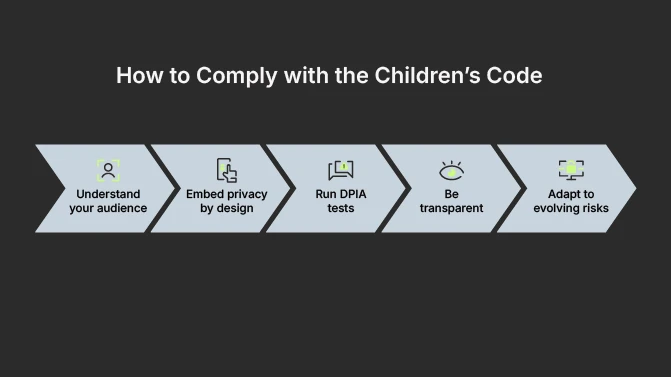

How to Comply with the Children’s Code

The UK’s Age Appropriate Design Code (Children’s Code) challenges online service providers to think beyond compliance and embrace a child-first mindset in everything they design, build, and launch. Complying with the Code means consciously protecting young internet users from invasive data collection and tracking.

Know Your Audience and Their Age

The first step is figuring out whether your service is likely to be accessed by children. If the answer is yes, the Code applies. You need to implement robust, well-documented measures to assess and, where appropriate, verify the age of your users. If you can’t establish age with enough certainty, the Code says you should treat all users as children and apply the highest safeguards; this reduces risk and demonstrates responsibility.
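
The fallback rule described above (when age is uncertain, treat the user as a child) can be sketched in a few lines of Python. This is only an illustrative sketch: the `AgeSignal` shape, its field names, and the `apply_child_safeguards` helper are hypothetical and do not come from the Code or the ICO.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AgeSignal:
    """Hypothetical outcome of whatever age check a service runs."""
    estimated_age: Optional[int]  # None when no estimate is available
    confident: bool               # True when the check is certain enough for the service's risk


def apply_child_safeguards(signal: AgeSignal) -> bool:
    """Return True when the user should receive child-level protections."""
    # Unknown or uncertain age: fall back to treating the user as a child.
    if signal.estimated_age is None or not signal.confident:
        return True
    # The Code covers users under 18.
    return signal.estimated_age < 18
```

Under these assumptions, a user with no usable age signal, or with an uncertain estimate, gets the same safeguards as a confirmed child, so the protective path is the default rather than the exception.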

Embed Privacy by Design

The Code expects online services to be “privacy by default” for children, which means turning off unnecessary data collection and sharing features. Running Data Protection Impact Assessments (DPIAs) before features go live is mandatory. In practice, you should stop collecting location data automatically, turn off excessive or intrusive notification settings, and collect only the personal data you absolutely need to provide the service.
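
One way to picture high privacy by default is a settings object whose every data-sharing option starts switched off. The sketch below is a hypothetical illustration; the `PrivacySettings` fields and the `risky_settings` helper are assumed names, not part of the Code.

```python
from dataclasses import dataclass, asdict


@dataclass
class PrivacySettings:
    """Hypothetical per-account settings; every option defaults to the high-privacy value."""
    profile_public: bool = False
    geolocation_enabled: bool = False
    profiling_enabled: bool = False
    third_party_sharing: bool = False
    marketing_notifications: bool = False


def risky_settings(settings: PrivacySettings) -> list:
    """List the options that have been switched away from the high-privacy default."""
    return sorted(name for name, value in asdict(settings).items() if value)
```

A freshly created `PrivacySettings()` reports no risky options, and anything a user later switches on shows up by name, which could feed a DPIA review or an internal audit.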

Be Transparent and Easy to Understand

To speak their language, you must ditch legal jargon. Your terms and conditions need to be clear, concise, and prominent, so that even children would understand them. Use plain language, creative designs, and tools like short videos or cartoons to explain exactly what data you collect, why you collect it, and what children’s rights are. Also, always be upfront about parental controls.

Stay Accountable and Adapt

Compliance with the Age Appropriate Design Code means staying accountable for your actions and adapting to changes in regulation and technology. Risks never disappear; they evolve along with new technologies. So you must keep your child-safety safeguards up at all times.

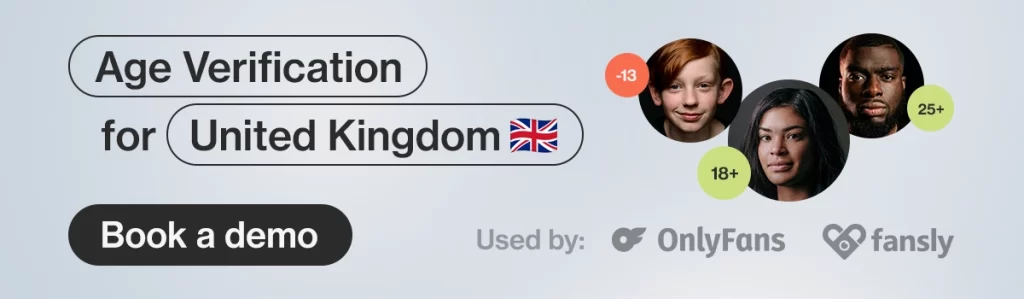

Age Assurance vs. Age Verification

Understanding the distinction between these two terms is essential because it sits at the privacy-respecting core of the Children’s Code. The Code insists on the distinction primarily to ensure data minimization and proportionality when handling children’s data.

Age Assurance is a broad, risk-based assessment of a user’s likely age. It relies on techniques such as asking for a simple date of birth, analyzing non-identifying signals such as a device’s typical usage patterns, or using biometric age estimation, which analyzes a face without requiring documents.

Its goal is to make a reasonable judgment about a user’s likely age, while preserving their privacy. Age assurance aims for data minimization and often doesn’t require hard proof of identity. Therefore, it’s used by low-to-medium risk platforms, or as a first step on any online platform.

Age Verification, on the other hand, is a definitive, proof-based identity check in which the user provides official documentation. Its goal is to verify the user’s age externally with a high degree of certainty, typically using government-issued ID, a national identity number, or a bank-verified status.

Because age verification provides high assurance, it’s used by high-risk services where legal compliance or the prevention of significant harm is paramount, especially for adult or other age-restricted content. The Code doesn’t demand full-blown verification for every site; rather, it asks you to match the level of assurance (low, medium, or high) to the risk of harm your platform may pose to children.

| | Age Assurance | Age Verification |
| --- | --- | --- |
| What it is | A general assessment of likely age | A proof-based identity check |
| Privacy impact | Low (Minimal data collection) | Higher (Requires official documents/data) |
| Assurance level | Low to Medium | High |
| Example | Asking for a date of birth; facial age estimation | Checking a photo ID; bank-verified age status |
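
Matching the assurance level to the risk of a service can be sketched as a simple lookup. The example below is a rough illustration only: the method names and the level assigned to each method are assumptions made for this sketch (the table above only says that age assurance spans low to medium and that verification is high), not ratings published by the ICO.

```python
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical mapping of age-check methods to the assurance they provide.
METHOD_ASSURANCE = {
    "self_declared_dob": Level.LOW,
    "facial_age_estimation": Level.MEDIUM,
    "photo_id_check": Level.HIGH,
    "bank_verified_age": Level.HIGH,
}


def acceptable_methods(service_risk: Level) -> list:
    """Return the methods whose assurance is at least as high as the service's risk level."""
    return sorted(m for m, level in METHOD_ASSURANCE.items() if level >= service_risk)
```

With this mapping, a high-risk service is left with only the document-based or bank-based checks, while a low-risk service may choose any method and can therefore pick the least intrusive one.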


How the Code Relates to the UK GDPR and the Online Safety Act

The UK GDPR is the foundation: it sets out the broad principles the AADC builds on, stating that you must collect people’s data fairly, you must minimize what you collect, and people have rights over their data.

Meanwhile, the AADC (or the Children’s Code) is the “how-to guide” that takes those abstract GDPR rules and makes them specific to children’s online activities. In other words, it tells you exactly what “fair and lawful data processing” looks like when your user is under 18.

The Code’s 15 standards, including “privacy by default,” “data minimization,” and “no nudging,” are in effect the ICO’s instruction manual for achieving GDPR compliance when children are involved. This, in turn, means that when you comply with the Code, you are well placed to show that you meet your GDPR obligations for your youngest users. For example, where GDPR says you need to be transparent about what data you collect, the Code says that transparency must come in clear, age-appropriate language, such as cartoons instead of legal text.

The Online Safety Act (OSA), by contrast, introduces a regulatory framework focused on content safety, especially protecting children from harmful or age-inappropriate material when they use online platforms in the UK, such as search engines, social media, and other user-to-user services.

Under the OSA, providers of certain online services “likely to be accessed by children” must carry out risk assessments, implement age assurance or age checks, moderate harmful content such as pornography, self-harm, or violence, and comply with codes of practice set by Ofcom.

To sum up: while the Children’s Code focuses on how children’s (sensitive) personal information is handled, the OSA focuses on what children are exposed to and how service providers ensure that exposure is managed safely.

Common ICO Findings and Mistakes to Avoid

The most common findings by the UK Information Commissioner’s Office (ICO), particularly in audits related to the Age Appropriate Design Code (Children’s Code), revolve around a failure to implement “high privacy by default” for child users.

If your service may be used by children, watch for these key mistakes and compliance flags:

| Mistake to avoid | ICO finding | Compliance standard |
| --- | --- | --- |
| Profiling by default | The service is set to automatically profile children for targeted ads or content without any user action or clear consent. | Profiling should be OFF by default. The platform must demonstrate a compelling reason that profiling is in the child’s best interest to be on. |
| Geolocation left ON | Precise geolocation tracking is automatically active when a child uses the service. | Geolocation must be OFF by default. If location is necessary, it must be approximate, and tracking that is visible to others must revert to OFF after each session. |
| Dark-pattern nudges | The use of visual or design tricks, such as colorful graphics, pressure text, confusing buttons, to nudge children into sharing more data or lowering their privacy settings. | Nudge techniques must be used only to promote high privacy options or healthy behaviors, not to pressure children to waive their rights. |
| Unclear privacy notices | Policies and terms are overly long, complex, or buried in technical language, making them impossible for children or young people to understand. | Transparency requires information to be concise, prominent, and in clear language suited to the age of the child. Services must provide “bite-sized” explanations when data use is activated. |
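
The geolocation row above lends itself to a small sketch: location starts off, an indicator is shown while it is on, and any sharing that is visible to others is reset when the session ends. The `LocationState` class and `end_session` function are hypothetical names used only for illustration, and keeping the child's own `enabled` choice across sessions is an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass
class LocationState:
    """Hypothetical per-user location state; both flags default to OFF."""
    enabled: bool = False
    visible_to_others: bool = False

    def indicator(self) -> str:
        # Text for an on-screen indicator; empty when location is off.
        return "location on" if self.enabled else ""


def end_session(state: LocationState) -> LocationState:
    """Return the state to carry into the next session.

    Sharing that is visible to others always reverts to OFF; the child's
    own 'enabled' choice is kept (an assumption of this sketch).
    """
    return LocationState(enabled=state.enabled, visible_to_others=False)
```

For example, a child who shares their location with friends during one session would start the next session with that sharing switched off again and would have to opt in once more.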

What AADC Means for Children, Parents, and Businesses

The UK Children’s Code sets a new standard for children’s digital well-being by requiring online service providers to design services that protect children by default. The Code brings real benefits for children, their parents, and businesses too.

A Safer Digital World for Kids

Children today are digital natives, but that doesn’t mean they know how to avoid data traps and protect their privacy. The internet is still a risk-prone space. To put guardrails in place and create a safer space, the Age Appropriate Design Code ensures that apps, games, and platforms are built with children’s best interests at heart from day one. Features like default privacy settings, age-appropriate content, and transparent data practices mean kids can explore, learn, and play online without being exploited.

- The AADC is about creating spaces where curiosity doesn’t come at a hidden cost.

Parents’ Peace of Mind

Parents shouldn’t need a law degree to understand what happens to their child’s data. The Children’s Code is designed to put clarity and control back into their hands. When platforms comply, parents can trust that their kids aren’t being tracked across the web, targeted with inappropriate ads, or nudged into sharing more than they should.

- The AADC transforms “I hope this is safe” into “I know my child is protected”.

Trust as a Competitive Edge for Businesses

For online service providers, avoiding fines and penalties is not the only motivation. Compliance with the Age Appropriate Design Code is also an opportunity to build lasting credibility. Companies that embrace these standards signal that they’re responsible, forward-thinking, and worthy of trust. The business benefits of the Code also include reduced legal risk and a position as a brand that actually cares about the communities it serves.

- In a market where data scandals regularly make headlines, being compliant with the AADC is a badge of honor.

Final Thoughts

By setting clear rules for how we protect kids online, the UK’s Age Appropriate Design Code puts children’s well-being front and center and treats their personal data with the respect it deserves. For businesses, the Code marks a genuine turning point in digital safety that goes beyond legal compliance. Companies that lean into its principles take on a moral obligation to shape a safer, more trustworthy internet that looks after the mental and physical health of the next generation. In the process, they build something invaluable: a reputation for being responsible, ethical, and genuinely committed to the people they serve.