Georgia Age Verification Law: What Businesses Need to Know

Georgia’s “Protecting Georgia’s Children on Social Media Act” (SB 351) introduces significant changes for social media platforms, minors, parents, and online content providers. This overview covers what the law requires, who it affects, and what it means for businesses and users.

When Does Georgia’s Age Verification Law Take Effect?

SB 351 was signed into law by Governor Brian Kemp on April 23, 2024, and is scheduled to take effect on July 1, 2025. The gap of more than a year gives platforms and other stakeholders a transition period to align with the new requirements.

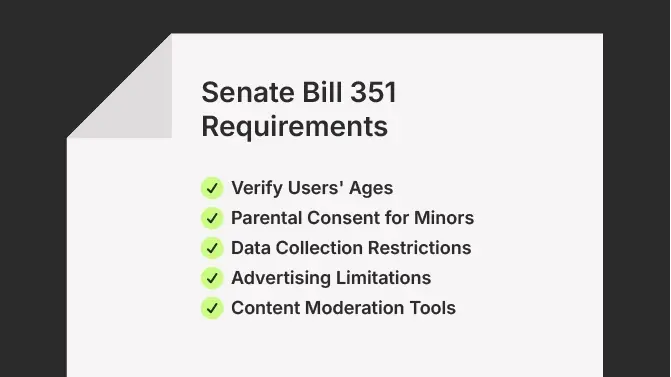

Key Requirements for Social Media Platforms

SB 351 places a series of stringent requirements on social media platforms, all designed to protect minors online. These obligations cover age verification, data handling, parental oversight, and advertising practices. Here’s what platforms must do:

Age Verification Obligations

Platforms are required to make “commercially reasonable efforts” to verify the age of every account holder. In practice, this can mean technical measures such as AI age estimation or collecting a date of birth at sign-up. For platforms that deal with adult content, the standard for compliance is higher and may align with existing models like NIST Identity Assurance Level 2 (IAL2), which calls for robust verification such as biometric or government-issued ID checks.

Parental Consent for Under-16 Users

If a user is under 16 years old, platforms must obtain express parental or guardian consent before allowing the child to create or maintain an account. Acceptable methods include written consent, a toll-free phone call, videoconference approval, and verified digital forms.
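The under-16 rule can be sketched as a simple gate at sign-up. The snippet below is a minimal illustration, not a compliance implementation: the function names and the boolean consent flag are hypothetical, and it assumes the platform already has a date of birth it trusts.

```python
from datetime import date

CONSENT_AGE = 16  # threshold described in the article; users below it need consent


def age_on(birth_date: date, today: date) -> int:
    """Return completed years of age on `today`."""
    years = today.year - birth_date.year
    # Subtract one year if this year's birthday has not happened yet.
    if (today.month, today.day) < (birth_date.month, birth_date.day):
        years -= 1
    return years


def may_hold_account(birth_date: date, has_parental_consent: bool, today: date) -> bool:
    """Allow the account if the user is 16 or older, or a parent has consented."""
    return age_on(birth_date, today) >= CONSENT_AGE or has_parental_consent
```

Passing `today` explicitly, rather than calling `date.today()` inside the function, keeps the check easy to test around birthdays.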

Data Collection and Privacy Restrictions

SB 351 limits the collection, use, and sharing of personal information from users under 16. Platforms can only gather what is strictly necessary to provide the service and must disclose their data practices clearly. This echoes principles found in privacy-focused legislation like the California Age-Appropriate Design Code.
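One way to picture the "strictly necessary" rule is an allowlist applied to profile data for under-16 users. This is only a sketch: the field names and the contents of `NECESSARY_FIELDS` are hypothetical, and which fields are actually necessary depends on the service.

```python
# Hypothetical allowlist: fields this example service treats as strictly necessary.
NECESSARY_FIELDS = frozenset({"username", "birth_date", "parent_contact"})


def minimize(profile: dict, is_under_16: bool) -> dict:
    """Return a copy of `profile`, keeping only allowlisted fields for under-16 users."""
    if not is_under_16:
        return dict(profile)
    return {key: value for key, value in profile.items() if key in NECESSARY_FIELDS}
```

An allowlist fails closed: a newly added profile field is dropped for under-16 users until someone deliberately marks it as necessary.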

Advertising Limitations for Minors

The law bans targeted advertising to minors. Platforms may only display contextual ads, which are not based on browsing history, behavior, or other sensitive data. This move is intended to reduce exploitative or manipulative marketing directed at children.
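In an ad-serving pipeline, this restriction might look like a filter applied before ad selection. The sketch below assumes a hypothetical `Ad` record whose `targeting` label is either `"contextual"` or `"behavioral"`; real ad systems classify targeting in more detail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ad:
    ad_id: str
    targeting: str  # hypothetical labels: "contextual" or "behavioral"


def eligible_ads(ads: list, viewer_is_minor: bool) -> list:
    """Drop every non-contextual ad when the viewer is a minor."""
    if not viewer_is_minor:
        return list(ads)
    return [ad for ad in ads if ad.targeting == "contextual"]
```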

Required Content Controls

Platforms must provide transparent descriptions of their content moderation features, including filtering, blocking, and reporting systems. Parents or guardians must also be granted the ability to manage these tools, enabling them to limit or disable certain features to protect their child’s experience online.

Who Must Comply With the Law?

Georgia’s age verification law, SB 351, primarily targets two categories of online services. First, it applies to social media platforms, defined broadly as services that allow users to create personal profiles, publish content, and interact with other users. This includes popular platforms such as Instagram, TikTok, Facebook, Snapchat, and any similar service where peer-to-peer communication is a core function. The law’s intent is to regulate spaces where minors may be exposed to potentially harmful or inappropriate content through unmonitored interaction. As reported by U.S. News, this part of the legislation reflects growing concern among lawmakers over the influence of these platforms on youth mental health and safety.

The law also applies to websites hosting material deemed harmful to minors. This includes adult content websites or any platform where a “substantial portion” of content falls under definitions of sexually explicit, violent, or otherwise inappropriate material for individuals under 18. Determining what constitutes a “substantial portion” is left somewhat vague in the legislation, raising potential questions for interpretation. However, legal experts, such as those at the Information Policy Centre, note that this wording is consistent with language used in other states’ laws and is likely intended to cast a wide net around adult content providers.

Importantly, certain entities are exempt from these requirements. The law explicitly excludes internet service providers (ISPs) and search engines, recognizing that these services do not directly publish or control user-generated content in the same way as social media platforms or content websites. This carveout is critical in avoiding unintended consequences for essential web infrastructure. Biometric Update and GPB News confirm that the law’s authors were deliberate in focusing regulation on content hosts and interaction hubs, rather than the broader internet ecosystem.

By establishing clear targets while exempting backend technologies, SB 351 aims to strike a balance between protecting minors and preserving general internet accessibility. However, critics argue the broad definition of “covered platforms” could lead to overreach or confusion in enforcement, a concern that is likely to be tested as legal challenges unfold.

Fines and Legal Consequences for Non-Compliance

Georgia’s SB 351 establishes clear financial and legal consequences for companies that fail to comply with its age verification and parental consent requirements. The law sets a civil penalty of up to $2,500 per violation for social media platforms or content providers that do not meet the mandated standards. Each instance of non-compliance, such as allowing a minor to create an account without parental consent, may be treated as a separate violation, which could quickly escalate penalties for larger platforms with high user volumes.

In addition to financial penalties, enforcement actions will be led by the Georgia Attorney General. However, enforcement is not immediate or automatic. The law includes a 90-day notice-and-cure provision: before legal proceedings can begin, the Attorney General must notify the company of the alleged violation and allow it 90 days to correct the problem. This provision gives businesses a buffer to come into compliance without incurring penalties, which is especially useful during the initial rollout period. These details are confirmed in analyses by KTS Law and reports by FOX 5 Atlanta.
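A compliance team tracking a notice might compute the cure deadline as shown below. This is a sketch under stated assumptions: it counts 90 calendar days starting the day after the notice date, and the statute's actual counting rules should be confirmed with counsel. The function name is hypothetical.

```python
from datetime import date, timedelta

CURE_PERIOD_DAYS = 90  # notice-and-cure window described in the article


def cure_deadline(notice_date: date) -> date:
    """Return the date 90 calendar days after the notice date (assumed counting rule)."""
    return notice_date + timedelta(days=CURE_PERIOD_DAYS)


# Example: a notice dated July 1, 2025 gives a deadline of September 29, 2025.
print(cure_deadline(date(2025, 7, 1)))  # 2025-09-29
```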

The law is even stricter when it comes to providers of adult content. Any site that publishes or distributes material considered harmful to minors may face fines of up to $10,000 per violation. This high penalty reflects the legislature’s intent to deter exposure of explicit content to underage users and to encourage adoption of robust, privacy-respecting verification systems.
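Because each instance can count as a separate violation, the ceiling on exposure scales linearly with the number of violations. The arithmetic can be sketched as follows; the caps come from the figures above, while the function and constant names are hypothetical and actual penalties would be set in enforcement proceedings.

```python
SOCIAL_MEDIA_CAP = 2_500      # maximum civil penalty per violation (USD)
HARMFUL_CONTENT_CAP = 10_000  # maximum per violation for harmful-content sites (USD)


def max_exposure(violations: int, cap_per_violation: int) -> int:
    """Return the upper bound on penalties: violations times the per-violation cap."""
    if violations < 0:
        raise ValueError("violations must be non-negative")
    return violations * cap_per_violation


# 1,000 non-compliant accounts on a social platform: up to $2,500,000.
print(max_exposure(1_000, SOCIAL_MEDIA_CAP))     # 2500000
# The same count on a harmful-content site: up to $10,000,000.
print(max_exposure(1_000, HARMFUL_CONTENT_CAP))  # 10000000
```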

In sum, Georgia’s enforcement framework aims to balance accountability with opportunity for remediation, offering companies time to adjust, but imposing steep fines for those who ignore the law.

How Parental Consent Is Collected and Verified

A central component of Georgia’s SB 351 is the requirement for verifiable parental consent for any user under the age of 16. Social media platforms and websites that fall under the law’s scope must obtain express permission from a parent or legal guardian before allowing a minor to create or maintain an account. The obligation goes beyond age verification: it also gives parents meaningful control over their child’s online presence and exposure to content.

To meet this requirement, platforms must use verifiable and approved methods for securing parental consent. According to the bill and legal analyses, such as the one from KTS Law, acceptable options include both traditional and digital formats. These may be:

- Signed consent forms submitted by mail, fax, or electronic upload;

- Toll-free telephone calls where a trained operator verifies parental approval;

- Video conferencing sessions between the parent and platform representatives;

- Use of government-issued identification in combination with a signed consent statement;

- Verified email consent, provided it is followed by additional steps (such as delayed access or follow-up verification) to confirm authenticity.
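The consent methods listed above could be modeled as a small record type, with email consent treated as incomplete until its follow-up step is done. This is an illustrative sketch only: the enum values, `ConsentRecord`, and `consent_is_complete` are hypothetical names, not terms from the bill.

```python
from dataclasses import dataclass
from enum import Enum


class ConsentMethod(Enum):
    SIGNED_FORM = "signed_form"
    TOLL_FREE_CALL = "toll_free_call"
    VIDEO_CONFERENCE = "video_conference"
    GOVERNMENT_ID = "government_id_with_statement"
    VERIFIED_EMAIL = "verified_email"


@dataclass(frozen=True)
class ConsentRecord:
    method: ConsentMethod
    follow_up_confirmed: bool = False  # only consulted for email consent


def consent_is_complete(record: ConsentRecord) -> bool:
    """Email consent counts only after its follow-up step; other methods stand alone."""
    if record.method is ConsentMethod.VERIFIED_EMAIL:
        return record.follow_up_confirmed
    return True
```

Storing the method alongside the consent itself also leaves an audit trail showing how each approval was obtained.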

The law aligns with the federal Children’s Online Privacy Protection Act (COPPA) in its structure, but Georgia’s requirements apply more broadly to platforms serving minors, not just those collecting personal data. As noted in coverage from 13WMAZ, this represents a more proactive stance in online child safety.

For websites that provide access to adult content, the requirements are even more stringent. These platforms must implement age verification systems that meet the National Institute of Standards and Technology’s Identity Assurance Level 2 (NIST IAL2). This high-assurance standard typically involves biometric identity matching, such as facial recognition linked to government-issued IDs, or in-person verification by a trusted identity service provider. The goal is to ensure that no underage user can access material deemed harmful to minors, even if they attempt to bypass consent requirements.

These standards not only help platforms comply with Georgia’s law but may also prepare them for similar regulations emerging in other states. By adopting rigorous consent and identity practices now, businesses can safeguard themselves against both legal risk and reputational harm.

Georgia vs Other States: How Laws Compare

| State | Key Provisions | Legal Status |
| --- | --- | --- |
| Georgia | Requires age verification for all social media accounts and harmful content sites; mandates parental consent for users under 16. | Scheduled to take effect July 1, 2025. |
| Arkansas | Requires parental consent for minors to create accounts on certain large social media platforms. | Law was blocked by a federal judge due to First Amendment concerns. |
| California | Passed the Age-Appropriate Design Code Act, focused on default privacy settings and data minimization for children under 18. | Signed into law in 2022, but faced legal challenges regarding constitutionality. |
| Texas | Enforces strict age verification for adult content; mandates ID checks aligned with biometric standards like IAL2. | In effect since 2023; enforcement efforts ongoing. |
| Ohio | Enacted social media parental consent law similar to Georgia’s. | Blocked by a federal judge over constitutional concerns. |

Legal Pushback and Public Debate in Georgia

SB 351 has sparked legal and public debates:

Age Verification Lawsuits: NetChoice, representing major tech companies, filed a lawsuit challenging the law’s constitutionality, citing First and Fourteenth Amendment violations.

Privacy Concerns: Critics argue that the law’s requirements could infringe on user privacy and free speech rights.

Supporters’ View: Proponents believe the law is essential for protecting minors from online harms.

What Should Businesses Do Next?

With Georgia’s SB 351 coming into force on July 1, 2025, businesses should act swiftly to align their platforms with the law’s requirements. Key next steps include:

Assess Compliance: Review how your platform handles user registration, age verification, and parental consent for users under 16.

Adopt Age Verification Solutions: Implement reliable and privacy-conscious tools.

Ondato’s AI-based Age Estimation enables instant, non-intrusive age checks using facial analysis. For long-term usability, OnAge provides a reusable age verification credential, allowing users to verify once and reuse their verified status.

Update Internal and Public Policies: Ensure your privacy policies, terms of service, and user flows reflect the law’s age and consent requirements.

Train Your Team: Equip legal, engineering, and support staff with the knowledge to implement, monitor, and enforce compliance protocols.

Getting ahead of the curve now not only helps avoid penalties but also builds trust with users and prepares your business for a broader regulatory shift across the U.S.